
Google driverless car meets cyclist

The ethics of self-driving car collisions: whose life is more important?

In an unavoidable collision involving a robotic driverless car, who should die? That’s the ethical question being pondered by automobile companies as they develop the new generation of cars.

Stanford University researchers are helping the industry to devise a new ethical code for life-and-death scenarios.

According to Autonews, Dieter Zetsche, the CEO of Daimler AG, asked at a conference: “If an accident is really unavoidable, when the only choice is a collision with a small car or a large truck, driving into a ditch or into a wall, or to risk sideswiping the mother with a stroller or the 80-year-old grandparent. These open questions are industry issues, and we have to solve them in a joint effort.”

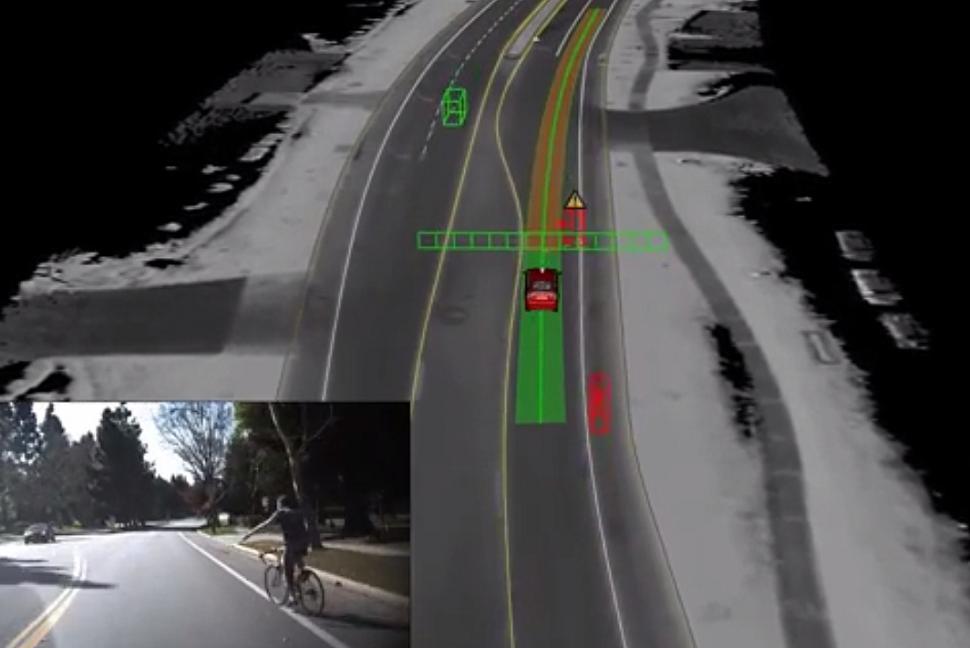

Google’s own self-driving car gives cyclists extra space if it spots them in the lane. In theory this puts the car’s occupants at greater risk of a collision, but the car does it anyway. This is an ethical choice.

“Whenever you get on the road, you’re making a trade-off between mobility and safety,” says Noah Goodall, a researcher at the University of Virginia.

“Driving always involves risk for various parties. And anytime you distribute risk among those parties, there’s an ethical decision there.”

Google’s car is constantly making decisions that weigh the information it can gain against safety risks, asking the following questions in a continuous loop:

1. How much information would be gained by making this maneuver?

2. What’s the probability that something bad will happen?

3. How bad would that something be? In other words, what’s the “risk magnitude”?

In an example published in Google’s patent, says Autonews, “getting hit by the truck that’s blocking the self-driving car’s view has a risk magnitude of 5,000. Getting into a head-on crash with another car would be four times worse: the risk magnitude is 20,000. And hitting a pedestrian is deemed 20 times worse, with a risk magnitude of 100,000.

“Google was merely using these numbers for the purpose of demonstrating how its algorithm works. However, it’s easy to imagine a hierarchy in which pedestrians, cyclists, animals, cars and inanimate objects are explicitly protected differently.”
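The three questions and the patent’s example magnitudes can be combined into a toy decision loop. This is a minimal sketch under stated assumptions, not Google’s implementation: only the three risk magnitudes come from the patent example as reported by Autonews. The `Maneuver` and `choose` names, the information-gain values, the probabilities and the hypothetical `RISK_WEIGHT` that trades information against risk are all invented for illustration.

```python
from dataclasses import dataclass, field

# Risk magnitudes from the example in Google's patent, as reported by Autonews.
RISK_MAGNITUDE = {
    "hit_by_truck": 5_000,
    "head_on_with_car": 20_000,
    "hit_pedestrian": 100_000,
}

# Hypothetical weighting: how much information gain offsets one unit of expected risk.
RISK_WEIGHT = 1.0


@dataclass
class Maneuver:
    name: str
    info_gain: float  # Q1: information gained (hypothetical units)
    outcome_probs: dict[str, float] = field(default_factory=dict)  # Q2: P(each bad outcome)

    def expected_risk(self) -> float:
        # Q3: weight each bad outcome's probability by its risk magnitude and sum.
        return sum(p * RISK_MAGNITUDE[o] for o, p in self.outcome_probs.items())

    def score(self) -> float:
        # Higher is better: information gained minus weighted expected risk.
        return self.info_gain - RISK_WEIGHT * self.expected_risk()


def choose(candidates: list[Maneuver]) -> Maneuver:
    """One pass of the decision loop: pick the best-scoring maneuver."""
    return max(candidates, key=Maneuver.score)


# Invented probabilities and information values, for illustration only.
candidates = [
    Maneuver("hold position behind truck", info_gain=0.0),
    Maneuver(
        "edge out to see past truck",
        info_gain=60.0,
        outcome_probs={
            "hit_by_truck": 0.002,
            "head_on_with_car": 0.001,
            "hit_pedestrian": 0.0001,
        },
    ),
]

for m in candidates:
    print(f"{m.name}: expected risk {m.expected_risk():.1f}, score {m.score():.1f}")
print("chosen:", choose(candidates).name)
```

With these made-up numbers the car edges out (score 60 - 40 = 20 against 0 for holding position); doubling the hypothetical `RISK_WEIGHT` to 2.0 flips the choice (60 - 80 = -20). The ethical judgements the article describes sit in the magnitude table and in that weighting.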

We recently reported how Google has released a new video showing how its self-driving car is being taught to cope with common road situations such as encounters with cyclists. We’d far rather share the road with a machine that’s this courteous and patient than with a lot of human drivers.

We’ve all been there. You need to turn across the traffic, but you’re not quite sure where, so you’re a bit hesitant, perhaps signalling too early and then changing your mind before finally finding the right spot.

Do this in a car and other drivers just tut a little. Do it on a bike and some bozo will be on the horn instantly and shouting at you when he gets past because you’ve delayed him by three-tenths of a nanosecond.

But not if the car’s being controlled by Google’s self-driving system. As you can see in this video, the computer that steers Google’s car can recognise a cyclist and knows to hold back when it sees a hand signal, and even to wait if the rider behaves hesitantly.


69 comments

Oh yeah, another thing.

What happens when one of these cars is pulled over by the police for something like a lighting defect, bald tyre or lack of MOT? Is the person in the car responsible (if there is someone inside - lots of commenters predict them driving around empty)? What if the person inside is a child or perhaps heavily under the influence of drink (both suggested as legitimate ways for these vehicles to be used)?

I'm not opposed to the technology but the more I think about it the more I see pretty major barriers to it being used in the way many people predict. I can imagine cars coming with a sort of auto-pilot but progress beyond that is going to be a very long time coming.

How will the car know to pull over?

I kinda figured the car would identify an emergency vehicle with sirens and lights and pull over. That said I've got my doubts about it being smart enough to know whether it should be getting out of the way to let an ambulance past or stopping in a safe place for a chat with a police officer.

Given that the car will need some sort of wireless uplink to communicate with other autonomous vehicles, it's possible that the fire engine/ambulance/police car could communicate directly with it and say exactly what it is and what's going on and what to do next.

I'm not sure driverless cars will necessarily communicate with each other in this way. I'd guess that they probably will, but if they can cope with pedestrians, cyclists and drivers based only on what they 'see', they should be able to cope with each other too. Moreover, the first driverless cars on the roads will need to fit with the status quo. Our emergency services aren't going to roll out new technology to all their vehicles and train all of their staff in its use before driverless cars hit the roads. Initially, at least, the cars will need to know how to respond to lights and sirens.

Yeah these driverless cars will be wonderful and safe, except when it's raining and they can't function correctly. Or when a fly, or moth, or Sahara dust blows onto the camera. In those cases you will be doomed, but otherwise nothing can go wrong can go wrong can go wrong can go wrong. ..... bzzzzzzt system reboot blue screen of death

You say this as though they're releasing these cars into the wild tomorrow with known faults, or that the software won't fail gracefully in the event of something untoward happening.

That's what fail-safes and system planning are for, because things will go wrong and you try to mitigate the unwanted effects when they do. Personally, I think I'd probably favour a somewhat cautious autonomous vehicle over most of the idiots I see on the roads every day.

My concern is not quite as extreme as Ramz's, but I wonder if the failsafes and cautious programming might be the downfall of these vehicles. This article has made me think back to a minor bump that I had in the car a few years ago. It was snowing heavily and the single-track road I was on was ice-rink slippery. Two cars had previously lost control on a corner and crashed, and I collided with one of them (although I was able to avoid hitting any of the people who were standing around the cars). I thought about what a self-driving car would have done differently in the same situation. I fear that the answer may be that it would have refused to make the journey in the first place or, worse, would have stopped at the point that it was unable to see or feel well enough to continue safely, leaving me stranded.

Another thought on this, should driverless cars put themselves in harm's way to prevent injury to vulnerable parties? For instance placing itself between another vehicle and a pedestrian when a collision is deemed imminent.

Although we are talking about rare events this is quite critical in the evolution of driverless cars. Here's another scenario:

A driverless car is overtaking a cyclist on a dual carriageway. The car is in the offside lane when a truck comes through the central reservation. The car can avoid a collision with the truck by swerving through the cyclist, or maintain its course and brake to reduce the severity of the collision with the truck (which will still be a massive impact). There's no right answer and that's really the problem. Cars that squash people rather than put their passengers at risk will not be tolerated, and nobody is going to buy a car that prioritises the safety of anyone other than its occupants.

It could take only one instance of a driverless car making the 'wrong' decision to set back the technology massively, so however rare these situations might be, the protocol needs to be nailed down.

These incredibly rare scenarios are reminiscent of the much-discussed 'trolley problem' from moral philosophy, and its variants about redirecting runaway trolleys on railway tracks.

Lots of people seem to be saying that it isn't a case of choosing who to kill. I agree, but the point in the article seems to me to be about how to balance the risks you are exposing various others to. The choice of speed and road positioning will always be a trade off seeking to minimise risk to all other road (and pavement) users, while also seeking to make good progress on the journey. These trade-offs have to be made ethically and sometimes raise quite challenging questions that humans don't even consciously bother to think about.

I expect Audi will still have the most aggressive software, as their target market will demand it!

The existence of these ethical decisions in driving is nothing new. Human drivers have been making them since... well, since we started walking. The existence of a hierarchy of "things to hurt" is not new either, it's just that up till now it has only existed in each individual's mind.

Ignoring the ethics, just think of the fuel savings: no hard accelerations, and cars moving in convoy on the streets for an aerodynamic saving. Cities will become cleaner.

Better still there will be less or no need to own a car, so us cyclists won't have to move around parked cars on every street, hopefully.

Cars will not move in convoys, there is nothing magical about autonomous vehicles that will allow them to do this, I wish people would stop saying this. Autonomous cars will not be able to drive closer to the car in front than people already do on average. If you don't believe me then do a little research on braking distances and do the maths.
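The braking-distance maths the commenter points to can be sketched with a simple model: total stopping distance is the reaction distance (speed × reaction time) plus the braking distance v²/(2a). This assumes constant speed during the reaction time and constant deceleration afterwards; the 6.5 m/s² deceleration and the 1.5 s (human) and 0.2 s (computer) reaction times are illustrative assumptions, not measured figures.

```python
MPH_TO_MS = 0.44704  # one mile per hour in metres per second


def stopping_distance(speed_mph: float, reaction_s: float, decel_ms2: float) -> tuple[float, float]:
    """Return (reaction distance, braking distance) in metres.

    Assumes constant speed during the reaction time, then constant
    deceleration until the vehicle stops.
    """
    v = speed_mph * MPH_TO_MS
    reaction = v * reaction_s          # distance covered before the brakes bite
    braking = v * v / (2 * decel_ms2)  # from v^2 = 2 * a * s
    return reaction, braking


# Illustrative assumptions only: 6.5 m/s^2 braking, 1.5 s human vs 0.2 s computer reaction.
for label, reaction_s in [("human", 1.5), ("computer", 0.2)]:
    r, b = stopping_distance(70, reaction_s, 6.5)
    print(f"{label:8} at 70 mph: reaction {r:5.1f} m + braking {b:5.1f} m = {r + b:5.1f} m")
```

In this model a faster reaction shortens only the first term; the braking term depends on speed and grip alone, so it is the same whoever, or whatever, is driving.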

Autonomous vehicles could also use a lot more fuel than cars do today and cause more traffic. Today we do not have roads full of empty cars driving round, with autonomous cars that could change.

Upside of all of this is if autonomous cars really drive better around cyclists then cycling would become safer and more pleasant leading to a big uptake.

Most of the UK population lives in cities, not out in the country. Your argument seems to imply that all city roads, including most of the A and B roads, should have their speed limits reduced to 15mph, because if someone runs from the pavement into the road while you're going faster than 15mph, you won't have enough time to stop, autonomous or otherwise.
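Whether 15mph is the right figure depends on how far ahead the person steps out and on the assumed reaction time and braking. A minimal sketch, under the same kind of assumptions (constant speed while reacting, then constant deceleration; illustrative values only), solves the stopping-distance equation v·t + v²/(2a) = d for the highest speed at which a car can still stop within a given distance. The function name `max_speed_to_stop` and the 10 m, 6.5 m/s², 1.5 s and 0.2 s figures are hypothetical.

```python
import math

MS_TO_MPH = 1 / 0.44704  # miles per hour in one metre per second


def max_speed_to_stop(distance_m: float, reaction_s: float, decel_ms2: float) -> float:
    """Highest speed (mph) from which a vehicle can stop within distance_m.

    Solves v*t + v**2 / (2*a) = d for v >= 0 (the positive root of the
    quadratic), assuming constant speed during the reaction time and
    constant deceleration afterwards.
    """
    t, a, d = reaction_s, decel_ms2, distance_m
    v = a * (-t + math.sqrt(t * t + 2 * d / a))
    return v * MS_TO_MPH


# Illustrative assumptions: someone steps out 10 m ahead, 6.5 m/s^2 braking.
for label, t in [("human, 1.5 s reaction", 1.5), ("computer, 0.2 s reaction", 0.2)]:
    print(f"{label}: {max_speed_to_stop(10, t, 6.5):.1f} mph")
```

Changing `distance_m` shows how quickly the answer moves: in this model the safe speed depends as much on how far ahead the hazard appears as on the reaction time.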

This strange bit of road has a 40mph speed limit directly followed by 'SLOW' written on the road! Since it has a pavement, should cars slow to 15mph if there is someone on the pavement? With a dog or a child? Currently most traffic goes at the 40mph speed limit.


Yes, but a few of these cars, presumably the ones made on a Friday afternoon, will be plain nasty. They won't much care for humans and will quietly plan the end of our species, one car crash at a time. This is definitely true, Russell Brand told me.

Presumably these cars won't break speed limits. That will be a big change in traffic behaviour, especially when there is more than a certain critical number of them.

I think people struggle to understand that self driving cars will drive the way we're all supposed to, rather than driving a tiny bit better than all the rubbish drivers we see all day every day.

That and at some of these edge cases it will simply be the other person's fault.

So many silly arguments. If you're ever in a position where you have to choose between killing a cyclist, a grandmother, or the people in the car, it's because you've fucked up royally somewhere. Autonomous vehicles simply won't put themselves into these situations.

Sure, we might see a few deaths to begin with as a result of software issues and faults, and naysayers will bask in their moment of glory, but these will be ironed out and deaths on the roads will drop to a tiny fraction of what they are now.

Imagine a fleet of motorists that always obey the rules, don't take unnecessary risks, have unlimited patience, aren't affected by emotions, drive super efficiently, and have 360-degree situational awareness. Sounds like bliss? That's what autonomous motoring will be.

If you follow the rules of the road, ethics don't really play a part. Not killing people isn't hard.

Not necessarily. Someone who doesn't have priority might have just moved into the space in front of you and not given you time to stop. The rules of the road don't say you have to drive slowly enough to allow that to happen safely at any time.

If you don't have time to stop, you're driving too close, passing too fast, or you're not aware of what's going on around you. If all vehicles were autonomous, they wouldn't do such silly things as pull out into another car's path anyway. Besides, your point is completely moot, as an autonomous vehicle is going to react far more quickly to such a bullshit scenario than a human driver.

Look at the statistics for the Google cars. Every single incident (all minor) has been caused by humans. Collectively they've driven 700,000 miles (more than most people drive in a lifetime) without a single at-fault accident. They're simply not having to make these "do I kill the granny or the cyclist" decisions, because they avert those situations. And this is despite the fact that they are undoubtedly driving on roads with a lot of distracted people ogling the Google vehicle.

Really? Even if someone in a different lane swerves into your path without warning and slams on their brakes? Or a pedestrian or cyclist moves out from the pavement just in front of you without warning? I think if we were to allow those sort of things to really be safe there would have to be large empty buffer zones between lanes anywhere that people drive at more than a few mph.

Correct. You're giving scenarios that autonomous cars will be better equipped to deal with. An autonomous car will spot that pedestrian on the pavement a mile off and give a wide berth and appropriate speed. A human won't. Even the Google car senses when cars in the lane next to it speed up to pull in front of it.

An autonomous vehicle won't pull in front of another and slam on its brakes so that point is moot.

You're talking about real fringe cases. We have something that will revolutionise the way that we travel. And you're trying your best to find a situation involving a suicidal cyclist or pedestrian that might result in an injury. It's a pointless stance to take, and this kind of crap thinking stifles innovation.

I stand by my point (which you're not acknowledging, you're picking the dregs of the discussion), these vehicles aren't making these decisions of choosing who to kill. I mean, how often do humans even have to make that decision? Why is this now a big topic for debate? It's a complete non-issue if you look at the whole picture. The autonomous vehicles will take the course of best evasive action, and on the rare occurrence of a collision with a total fool that runs out and throws themselves in front, people like you will come to the front with pitchforks saying "we told you so", ignoring the 99% reduction in accidents.

I agree that these are extremely rare situations. A good driver should expect to go through a lifetime of frequent driving without causing any serious injuries. And I think self driving cars will be significantly safer than human-driven cars.

At least initially, autonomous cars will need to co-exist with human driven cars on the roads, so they may need to take evasive action when a human suddenly creates a hazard.

I'm not sure what stance you think I'm taking. I think the reason this is being talked about is that with humans we can just say these are rare events, that almost anything a person decides to do in such a difficult situation would be reasonable, and leave it at that. With a computer-controlled system the decision-making process needs to be written down in advance, so there is a chance to debate whether or not it is the right process.

I agree it's not a big issue in the scheme of things.

I don't feel the need to acknowledge everything you write - I've just replied to the bits that I had something to say about. If you inserted "almost certainly" before "because you've fucked up royally somewhere" then I would agree with that sentence.

Completely fair points, thank you and well said.

That last paragraph though, could you please outline a scenario where you need to choose between killing a cyclist, a grandmother, or the vehicle occupants where you haven't royally fucked up? Just one scenario. If you can do this, I'll edit my post to include the word "almost certainly". I just can't fathom how you can ever be in that situation.

Ok, that three way choice scenario is quite a challenge. I'll try.

You're driving on a straight two lane 40mph road at 30mph. A cyclist on the opposite lane riding towards you swerves right into your path to avoid a skip full of rubble on their lane. There is a grandmother (although I don't know how you'd know she was a grandmother) walking along a pavement on your side of the road. You can go straight into the cyclist, right into the skip, or left into the grandmother.

Thank you for accepting my challenge - chapeau!


I'm sorry but if you're driving along and you see a skip with a cyclist heading towards it, it's pretty obvious he isn't going to cycle through the skip. You've fucked up if you can't predict his change of path.

If you can't see the cyclist because it's a sharp bend and the skip is blocking the view, you proceed slowly and with caution. There's no need to make a decision of who to kill.

Ok fair point. Let's say the cyclist isn't riding towards the skip, they are hiding stationary just behind it, facing sideways into the road. They are low enough that you can't see them over the skip, and they ride out across your path a few meters ahead of you,
